Calling cars ‘autonomous’ is putting drivers at risk

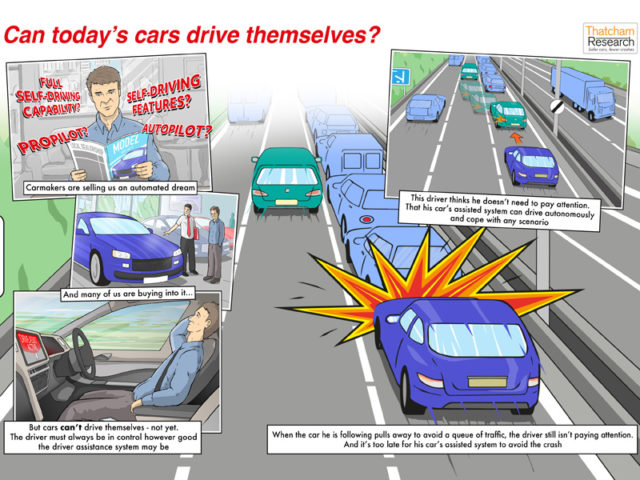

Carmakers should stop misleading drivers by using ‘autonomous’ terms for technology that still requires driver involvement.

Assisted driving systems are not as robust or as capable as they are declared to be, says Thatcham Research

That’s according to Thatcham Research and the UK’s motor insurance industry, which are trying to dispel the misconception that today’s assisted driving technologies are ‘autonomous’. They say such systems are “still in their infancy and not as robust or as capable as they are declared to be”.

The warning comes as Thatcham Research and the Association of British Insurers (ABI) launch a new testing regime that will mark down systems with misleading names. The organisations’ ‘Assisted and Automated Driving Definition and Assessment’ paper states that names such as Autopilot and ProPilot, used by Tesla and Nissan respectively for their current semi-autonomous technology, are “deeply unhelpful, as they infer the car can do a lot more than it can”.

Thatcham’s head of research Matthew Avery said: “Absolute clarity is needed, to help drivers understand the when and how these technologies are designed to work and that they should always remain engaged in the driving task.”

Last year, Avery told Fleet World in an interview that there should be “customer clarification where, if you’re driving a vehicle with a high level of assistance, we want to make sure the customer knows they are still responsible for keeping themselves and others around them safe”. This includes ensuring that the names given to such technologies do not confuse drivers.

The move follows earlier concerns raised by UK insurers and Thatcham that the evolution of automated driving technology could lead to a short-term rise in crashes, because drivers believe their cars are more capable than they are. At the time, the group published a paper calling on international regulators to draw a clear distinction between ‘Assisted’ and ‘Automated’ systems, an area it had already described as grey, and setting out 10 criteria that all cars must meet before they can be called ‘automated’.

Meanwhile, Ford said a year ago that it was skipping plans to introduce Level 3 cars, which can handle the driving in some conditions but still require the driver to take over when prompted, because of concerns over driver safety. The carmaker found that engineers testing these semi-autonomous cars, who were required to step in when needed, had fallen asleep at the wheel, despite alerts such as buzzers and vibrating seats.

Thatcham Research said the first real-world examples of such hazardous situations were starting to emerge, and it demonstrated what can happen when a motorist thinks a car can drive itself and relies on the technology. It has also drawn up a list of 10 key criteria that every assisted vehicle must meet, complementing the 10 criteria for automated vehicles.

The organisation has also revealed further details of a new consumer testing programme designed to assess assisted driving systems against the 10 criteria. An initial round of tests covering six cars with the latest driver assistance systems will take place this summer. The results will be used to generate final grades for insurers and consumer organisations and will be published in the autumn.

“The next three years mark a critical period, as carmakers introduce new systems which appear to manage more and more of the driving task. These are not autonomous systems. Our concern is that many are still in their infancy and are not as robust or as capable as they are declared to be. We’ll be testing and evaluating these systems, to give consumers guidance on the limits of their performance. The ambition is to keep people safe and ensure that drivers do not cede more control over their vehicles than the manufacturer intended,” said Avery. “How carmakers name assisted systems will be a key focus – with any premature inference around Automated capabilities being marked down. Automated functions that allow the driver to do other things and let the car do the driving will come, just not yet.”

Responding to the comments on the naming and use of Autopilot, a Tesla spokesperson said: “The feedback that we get from our customers shows that they have a very clear understanding of what Autopilot is, how to properly use it, and what features it consists of. When using Autopilot, drivers are continuously reminded of their responsibility to keep their hands on the wheel and maintain control of the vehicle at all times. This is designed to prevent driver misuse, and is among the strongest driver-misuse safeguards of any kind on the road today. Tesla has always been clear that Autopilot doesn’t make the car impervious to all accidents and the issues described by Thatcham won’t be a problem for drivers using Autopilot correctly.”